Integrated FLUX Fill + Redux + ACE Workflow for Universal Migration, with an Added Seam-Fix Module

Principle:

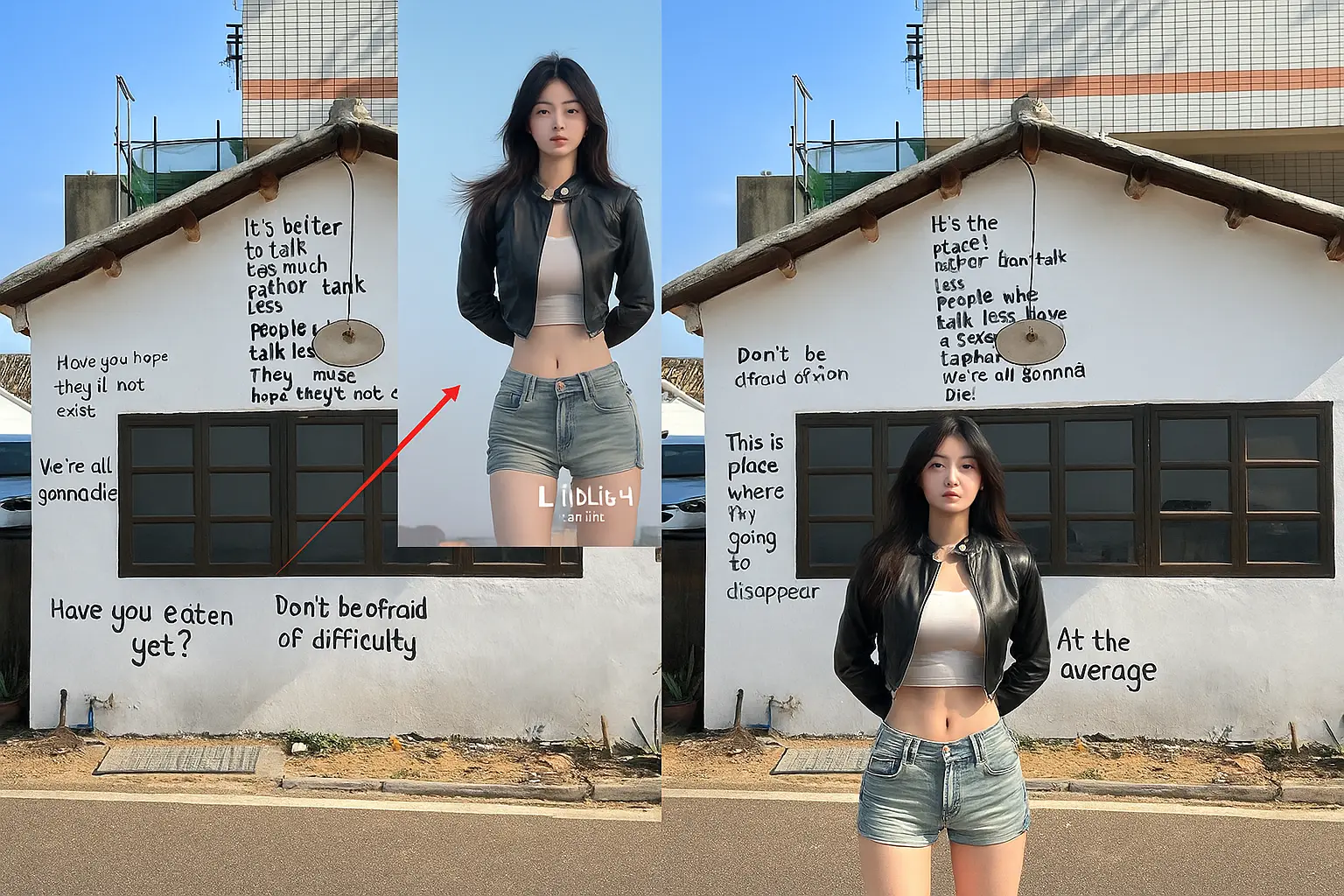

1. This workflow builds on the earlier FLUX Fill + Redux universal migration workflow and adds the ACE model, which significantly improves migration quality.

2. The CropAndStitch crop node cuts out the masked area of the reference image and enlarges it. Once enlarged, the reference details occupy more pixels, so Redux and the ACE model draw on them more strongly (give them higher weight). This makes migration easier.

Likewise, the masked area of the main image is cropped and enlarged. This keeps the combined (connection) image from becoming so large that sampling slows down, while still giving the redrawn area enough pixels to work with. Compare drawing a beautiful woman on a small sticky note with drawing one on an A4 sheet: which is easier to draw well? The result is excellent detail, faithful reproduction of the reference, realism and correct proportions. The approach is also efficient and makes better use of GPU resources. This is the core of the entire workflow.

3. The Stitch node pastes the final redrawn image back into the main image. You can also crop and restore with the LayerStyle Mask Clipping V2 node by Huluwabro. I prefer CropAndStitch, because after cropping it handles the upscaling, downscaling and stitching automatically. When migrating a portrait model into a scene, there are almost no visible seams, so the result looks very natural.

4. Previously, text prompts had little or no effect in FLUX Fill + Redux; adding ACE gives text prompts more weight. You have two options:
   - Use a reverse-prompt (image captioning) node to generate a prompt from the image.
   - Type your own instructions in Chinese, for example: "The person on the left is wearing the clothes of the person on the right", "The person on the left is holding the XX object from the right", or "The person on the right is sitting/standing next to the person on the left".

   Describe what you want in as much detail as possible. In the connection image, the main image is on the left and the reference image is on the right, and the area being redrawn is on the left side.

5. Face swapping, outfit swapping, product swapping, swapping the model in a scene and putting two people side by side are all possible. Keep experimenting.

6. When a model is migrated into a scene, the lighting is often adjusted automatically to match the scene, and the result looks very realistic.

7. A TTP high-resolution upscaling node will be added in the future.
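The crop, enlarge and stitch-back idea in items 2 and 3 can be sketched in Python with NumPy. This is a simplified illustration under stated assumptions, not the CropAndStitch node's actual code:
- The helper names (`mask_bbox`, `resize_nearest`, `crop`, `stitch`), the 20% `context` margin and the 1024 px `target` size are hypothetical choices for the example.
- Resizing is nearest-neighbour.
- The paste-back is a hard mask copy rather than a feathered blend.
- The reference image would only go through `crop`.
- The sampling step (FLUX Fill + Redux + ACE) is not modelled; `edited_crop` stands in for its output.

```python
import numpy as np


def mask_bbox(mask, context=0.2):
    """Bounding box (y0, y1, x0, x1) of the nonzero mask, grown by `context` on each side."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty")
    y0, y1 = int(ys.min()), int(ys.max()) + 1
    x0, x1 = int(xs.min()), int(xs.max()) + 1
    pad_y, pad_x = int((y1 - y0) * context), int((x1 - x0) * context)
    h, w = mask.shape[:2]
    return max(0, y0 - pad_y), min(h, y1 + pad_y), max(0, x0 - pad_x), min(w, x1 + pad_x)


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize for (H, W) or (H, W, C) arrays."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows][:, cols]


def crop(image, mask, target=1024, context=0.2):
    """Cut out the masked region plus context and scale its longer side to `target` px."""
    box = mask_bbox(mask, context)
    y0, y1, x0, x1 = box
    scale = target / max(y1 - y0, x1 - x0)
    out_h = max(1, round((y1 - y0) * scale))
    out_w = max(1, round((x1 - x0) * scale))
    crop_img = resize_nearest(image[y0:y1, x0:x1], out_h, out_w)
    crop_mask = resize_nearest(mask[y0:y1, x0:x1], out_h, out_w)
    return crop_img, crop_mask, box


def stitch(image, mask, edited_crop, box):
    """Scale the edited crop back to the box size and paste it where the mask is set."""
    y0, y1, x0, x1 = box
    patch = resize_nearest(edited_crop, y1 - y0, x1 - x0)
    out = image.copy()
    region = out[y0:y1, x0:x1]          # a view into `out`, so writes land in `out`
    inside = mask[y0:y1, x0:x1].astype(bool)
    region[inside] = patch[inside]      # pixels outside the mask stay untouched
    return out
```

Take a 200 × 150 px mask with 20% context. The crop box is 280 × 210 px, and it is enlarged to 1024 × 768 px. That gives the sampler about 13 times as many pixels, which is the sticky-note-versus-A4 point above.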
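Item 4 describes the layout of the connection image: main image on the left, reference image on the right, and only the left side redrawn. The NumPy sketch below shows that layout. It assumes both crops have already been scaled to the same height. The function names are hypothetical, and the real workflow nodes may pad or resize differently.

```python
import numpy as np


def build_connection_image(main, main_mask, ref):
    """Join main (left) and reference (right) into one canvas; mask only the left part."""
    if main.shape[0] != ref.shape[0]:
        raise ValueError("main and reference crops must have the same height")
    canvas = np.concatenate([main, ref], axis=1)
    mask = np.zeros(canvas.shape[:2], dtype=main_mask.dtype)
    mask[:, : main.shape[1]] = main_mask  # right part stays 0, so the reference is never redrawn
    return canvas, mask


def take_left_half(result, main_width):
    """After sampling, keep only the redrawn main image (left side of the canvas)."""
    return result[:, :main_width]
```

The reference stays visible to the model as context, but the zeroed right side of the mask keeps it from being redrawn.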

Operation Method:

1. Upload the main image you want to edit, right-click it, select "Open in Mask Editor" and paint over the parts you want to modify. You can also draw a closed outline, and the enclosed area is filled into the mask automatically. Repeat these steps on the reference image to paint the area you want to reference, then click start.

2. Set the number of output images (for example 1, 2 or 4). More images take longer to compute. One image generally takes about 1 minute, and the first run takes longer because the models have to be loaded. Keep trying until you get a satisfactory result; later refinement steps will also take several attempts.

3. If you have any questions, contact me on WeChat (VX): FDS LXC
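Step 1 mentions that a closed outline is filled into the mask automatically. Conceptually, this is hole filling on a binary mask: every pixel the outline encloses becomes part of the mask. The sketch below illustrates the idea with a flood fill from the image border. It is an assumption about the behaviour, not ComfyUI's Mask Editor code, and `fill_closed_outline` is a hypothetical name.

```python
from collections import deque

import numpy as np


def fill_closed_outline(stroke):
    """Return a boolean mask containing the stroke plus every pixel it encloses.

    Pixels reachable from the image border without crossing the stroke
    (4-connected moves) count as outside; everything else is in the mask.
    """
    stroke = np.asarray(stroke, dtype=bool)
    h, w = stroke.shape
    outside = np.zeros((h, w), dtype=bool)
    queue = deque()

    # Seed the flood fill with every unpainted border pixel.
    border = [(y, x) for y in range(h) for x in (0, w - 1)]
    border += [(y, x) for y in (0, h - 1) for x in range(w)]
    for y, x in border:
        if not stroke[y, x] and not outside[y, x]:
            outside[y, x] = True
            queue.append((y, x))

    # Breadth-first flood fill through unpainted pixels.
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
            if 0 <= ny < h and 0 <= nx < w and not stroke[ny, nx] and not outside[ny, nx]:
                outside[ny, nx] = True
                queue.append((ny, nx))

    return ~outside
```

In this sketch, a gap in the outline lets the flood fill leak inside, and then only the stroke itself stays masked. That is why the loop needs to be closed.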
